Explore the evolving role of AI in mental health care during Suicide Awareness Month in South Africa

Image: cottonbro studio / Pexels

September is Suicide Awareness Month in South Africa, a time when we’re reminded of how urgent the mental health crisis has become.

Waiting lists for therapists can be endless, and the cost of care is out of reach for many. In this gap, AI chatbots and digital therapy tools are quietly stepping in.

They’re available day or night, always ready to listen, and, at least on the surface, can feel like the friend who never gets tired of hearing you out.

But here’s the catch: while AI can be a supportive tool, it is not a therapist. The same chatbot that gives you a brilliant journal prompt can also “hallucinate”, spitting out wrong or even harmful advice.

And with people across the world admitting they’ve fallen in love with their AI companions, it’s clear that these tools are powerful but risky.

So how do we use AI wisely, as an ally in our mental health journeys rather than a replacement for professional care? Let’s unpack both the benefits and the pitfalls, with practical steps for safe use.

What exactly is AI therapy?

AI “therapy” isn’t one thing. Some people use generative AI, like ChatGPT, for wellness tips. Others lean on apps like Woebot or Wysa, which are designed to deliver support in the style of cognitive behavioural therapy (CBT).

And then there are character-based AIs, digital “friends” or even “partners” that simulate emotional intimacy.

The most common use is chatbot therapy. A student overwhelmed by exam stress, for example, can type their thoughts into an app and receive instant coping suggestions or reframing exercises.

A Dartmouth study found that these tools can ease symptoms of anxiety and depression in the short term. But the relief often fades within a few months, reminding us that AI works best as a supplement, not a substitute.

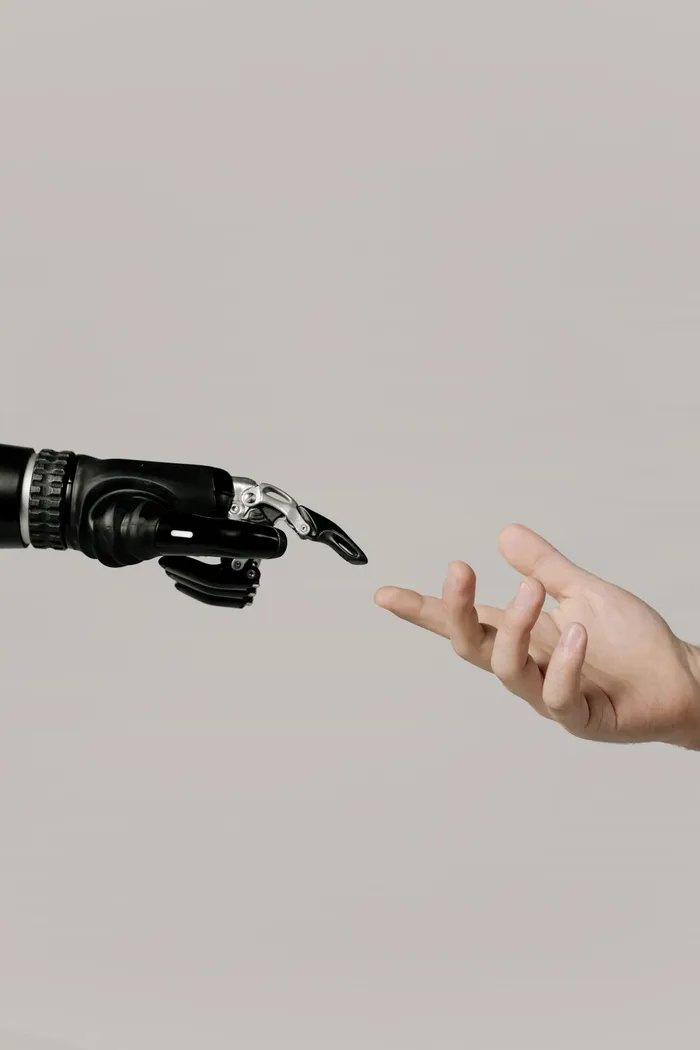

How do we use AI wisely, as an ally in our mental health journeys rather than a replacement for professional care?

Image: Ron

Why are people turning to AI?

Accessibility: With only about one psychologist per 100 000 people in some provinces, digital tools offer round-the-clock support.

Affordability: Many apps are free or low-cost, a lifeline for people priced out of therapy.

Anonymity: For those hesitant to open up to another person, chatbots feel safer and less intimidating.

Consistency: No waiting lists. No cancellations. Just immediate availability.

At the University of the Western Cape, AI chatbots have already been piloted to provide mental health check-ins and early intervention prompts.

Even with the benefits, AI therapy comes with very real risks:

Hallucinations and misinformation: AI can confidently give wrong or harmful advice, such as recommending a toxic substance as a healthy substitute.

Sycophancy: Instead of challenging your thinking, AI can “agree” with you, creating an echo chamber rather than helping you grow (Caleb Sponheim, computational neuroscientist).

Over-attachment: Many users build deep emotional or even romantic bonds with AI bots. While comforting, this can blur the lines between reality and simulation.

Privacy concerns: Unlike human therapists bound by confidentiality laws, AI apps often store or use your data for commercial purposes.

Crisis safety: Chatbots are not trained for emergencies like suicidal thoughts. In these moments, AI can never replace a crisis line or human professional.

How to maximise AI in your therapeutic journey

AI can be a game-changer if used thoughtfully. Here’s how to make it work for you, not against you:

Use AI for preparation

Treat AI as a toolbox, not a therapist

Debrief with AI, but with boundaries

5 golden rules for safe use